In the world of modern PC graphics hardware, all the buzz right now is about a rendering technique called ray tracing. This is mainly due to the release of Nvidia’s RTX development platform and Microsoft’s announcement of its compatible DirectX Raytracing (DXR) API for DirectX 12 on Windows, both of which took place earlier this year. DXR allows Windows developers to use modern GPUs to accelerate the process of ray tracing a 3D environment in real time. This is big news for gamers because ray tracing allows for a much more realistic rendering of light and its real-world behavior within a 3D scene. Or… it will be, since at present only a few games have been updated to use the rendering features that DXR brings to the table. And there aren’t a lot of GPUs out there yet with hardware designed with DXR in mind, directly targeting the acceleration of ray tracing calculations. Even so, it seems that ray tracing has become the new hotness, and it has even driven some observers fairly well out of their minds. It’s what’s new in tech.

Or is it?

Reading through today’s tech media, the casual observer could be forgiven for thinking so. One of the first articles I read about Nvidia’s new GPUs (MarketWatch, Aug. 14) stated,

Nvidia on Monday announced its next-generation graphics architecture called Turing, named after the early-20th century computer scientist credited as the father of artificial intelligence.

The new graphics processing unit (GPU) does more than traditional graphics workloads, embedding accelerators for both artificial-intelligence (AI) tasks and a new graphics rendering technique called ray tracing.

But ray tracing is not a new technique. In fact, it’s almost as old as the earliest of 3D computer graphics techniques.

So, what is ray tracing? As A.J. van der Ploeg describes in his “Interactive Ray Tracing: The Replacement of Rasterization?” [ PDF ],

In computer graphics, if we have a three dimensional scene we typically want to know how our scene looks trough a virtual camera. The method for computing the image that such a virtual camera produces is called the rendering method.

The current standard rendering method, know as rasterization, is a local illumination rendering method. This means that only the light that comes directly from a light source is taken into account. Light that does not come directly from a light source, such as light reflected by a mirror, does not contribute to the image.

In contrast ray tracing is a global illumination rendering method. This means that light that is reflected from other surfaces, for example a mirror, is also taken into account. This is essential for advanced effects such as reflection and shadows. For example if we want to model a water surface reflecting the scene correctly we need a global illumination rendering method. With a local illumination rendering method the light from the water surface can only be determined by the light directly on it, not the light from the rest of the scene and thus we will see no reflections.

…

Ray tracing works by following the path of light. We follow the path of rays of light, i.e. lines of light. For an example of such a path consider a ray of light from your bathroom lightbulb. This particular ray of light hits your chin, some of it is absorbed, and the rest of the light is reflected in the colour of your skin. The reflected ray is then reflected again by the mirror in your bathroom. This ray then hits your retina, which is useful otherwise you would not see your self shaving. In exactly this way a ray of light in the virtual camera gives the colour of one pixel.
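To make the description above concrete, here is a minimal sketch of the idea in Python. It is not taken from van der Ploeg's paper or from any particular renderer; the scene (two spheres, one point light), the names `hit_sphere`, `trace`, and `render`, and all constants are assumptions chosen for illustration. Like most practical ray tracers, it follows rays backwards, from the virtual camera out into the scene, one ray per pixel, and it adds a shadow ray and a mirror bounce to show the kind of effects the quote attributes to global illumination.

```python
import math

def sub(a, b): return (a[0] - b[0], a[1] - b[1], a[2] - b[2])
def add(a, b): return (a[0] + b[0], a[1] + b[1], a[2] + b[2])
def scale(a, s): return (a[0] * s, a[1] * s, a[2] * s)
def dot(a, b): return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]
def length(a): return math.sqrt(dot(a, a))
def normalize(a): return scale(a, 1.0 / length(a))

# Hypothetical scene: (center, radius, color, reflectivity) per sphere.
SPHERES = [
    ((0.0, 0.0, -3.0), 1.0, (1.0, 0.2, 0.2), 0.5),         # half-mirrored red ball
    ((0.0, -1001.0, -3.0), 1000.0, (0.8, 0.8, 0.8), 0.0),  # huge sphere acting as a floor
]
LIGHT = (5.0, 5.0, 0.0)
BACKGROUND = (0.1, 0.1, 0.3)
MAX_DEPTH = 3  # limit on mirror bounces

def hit_sphere(origin, direction, center, radius):
    """Distance along a unit-length ray to the nearest hit in front of it, or None.
    Only the nearer root is checked, so rays starting inside a sphere are ignored."""
    oc = sub(origin, center)
    b = 2.0 * dot(oc, direction)
    c = dot(oc, oc) - radius * radius
    disc = b * b - 4.0 * c
    if disc < 0:
        return None
    t = (-b - math.sqrt(disc)) / 2.0
    return t if t > 1e-4 else None

def trace(origin, direction, depth=0):
    """Return the color seen along one ray."""
    nearest = None
    for center, radius, color, refl in SPHERES:
        t = hit_sphere(origin, direction, center, radius)
        if t is not None and (nearest is None or t < nearest[0]):
            nearest = (t, center, color, refl)
    if nearest is None:
        return BACKGROUND
    t, center, color, refl = nearest
    point = add(origin, scale(direction, t))
    normal = normalize(sub(point, center))

    # Shadow ray: is anything between the hit point and the light?
    to_light = sub(LIGHT, point)
    light_dist = length(to_light)
    to_light = normalize(to_light)
    shadowed = False
    for c, r, _, _ in SPHERES:
        s = hit_sphere(point, to_light, c, r)
        if s is not None and s < light_dist:
            shadowed = True
            break
    diffuse = 0.0 if shadowed else max(0.0, dot(normal, to_light))
    result = scale(color, 0.1 + 0.9 * diffuse)  # small ambient term plus direct light

    # Mirror bounce: follow the reflected ray and blend in what it sees.
    if refl > 0.0 and depth < MAX_DEPTH:
        bounce = sub(direction, scale(normal, 2.0 * dot(direction, normal)))
        seen = trace(point, bounce, depth + 1)
        result = add(scale(result, 1.0 - refl), scale(seen, refl))
    return result

def render(width, height):
    """One ray per pixel, camera at the origin looking down -z."""
    image = []
    for y in range(height):
        row = []
        for x in range(width):
            px = (2.0 * (x + 0.5) / width - 1.0) * (width / height)
            py = 1.0 - 2.0 * (y + 0.5) / height
            row.append(trace((0.0, 0.0, 0.0), normalize((px, py, -1.0))))
        image.append(row)
    return image
```

Calling `render(160, 120)` returns rows of RGB tuples with components between 0 and 1, which can then be written out in any image format. Every pixel costs at least one ray plus shadow and reflection rays, which hints at why rendering a full frame this way has historically been so slow.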

The first time the technique known as ray tracing was used to render a scene seems to have been around 1972. The Mathematical Applications Group, Inc. (MAGI) was formed in 1966 to evaluate exposure to nuclear radiation, and did so using software it developed, called SynthaVision, that involved the concept of ray casting, allowing radiation to be traced from a source to its surroundings. By tracing light instead of radiation, SynthaVision was then adapted for use in computer-generated imagery (CGI) and became what was likely the first ray tracing system ever developed. In 1972 MAGI/SynthaVision was formed by Robert Goldstein, Bo Gehring, and Larry Elin to drive SynthaVision’s use in film and television. MAGI produced the first CGI advertisement, for IBM, in the 1970s, as well as the majority of the CGI work in the 1982 Disney film Tron.

In 1985 Commodore released the 7.14MHz, 16-bit Amiga computer with its groundbreaking video graphics capabilities. One of the early demos created for the Amiga that really caught people’s attention was The Juggler. It was a looping, 24-frame animation of a ray traced juggling figure displayed in the Amiga’s unique HAM mode, which allowed its entire palette of 4096 colors on screen at once. I remember marveling over the Juggler on my Amiga back in early 1986. The Juggler was rendered frame-by-frame using an experimental ray tracing program called ssg, written by the animation’s creator, Eric Graham. It made such an impact that Graham expanded the program into Sculpt 3D, which he brought to market. Sculpt/Animate 4D followed, and it inspired a flurry of Amiga-based ray tracers from many different developers.

Hundreds of other ray traced animations soon appeared on local dial-up bulletin board systems, rendered on the Amiga as well as many of the other platforms of the day. And, from Tron right up to today, ray tracing has been a big part of the creation of basically all CGI films, such as the entire catalogs of Pixar, DreamWorks Animation, and many others.

Something to be aware of here is that all of the animations and films I’ve spoken of were rendered frame-by-frame in a very lengthy process, often involving large arrays of very powerful computers, or render farms. Tron’s imagery was rendered to film line-by-line, so meager were the computing resources of the time (though mainframes they were). It took Pixar’s render farm an average of four hours to render a single frame of their early film, Toy Story. That render time has remained fairly consistent at Pixar over the years; as their computing resources have grown, they have increased the render complexity of each frame in each successive production.

What today’s buzz is all about is real time ray tracing. That’s what DirectX Raytracing is concerned with. It uses CPU and GPU power to perform certain ray tracing functions, but also supports compute hardware designed specifically to perform ray tracing calculations, such as the Turing microarchitecture GPU at the heart of the Nvidia GeForce 20 series graphics cards. The “RT” cores in a Turing GPU are said to perform ray tracing calculations eight times faster than the GeForce 10 series’ GPUs based on the Pascal microarchitecture. Of course, software (read: games) must support ray tracing, through DXR or another means, in order to deliver these next-level visuals to our retinas. For a sample of such visuals, see Digital Foundry’s video of a ray tracing-enabled build of Battlefield 5 at this year’s Gamescom.

So, ray tracing. It’s a rendering technique that has been around for over 45 years. It’s nothing new. Finally seeing the benefits of this technology enhance the environments in our games and VR worlds — in real time — thanks to new APIs and dedicated consumer hardware, that’s the New Thing.

You got vitriol for that post? Wow, I guess there are some out there that have nothing better to do than be jerks. One of my friends did some stunning pictures using POV-Ray (http://www.povray.org/) back in the mid-1990s, but they did take a long time to render on the equipment that we had at the time. I think he was able to use the ComSci department’s Sequent Symmetry (I think it had like forty-eight 80386 CPUs) to cut down the render times. Real-time ray tracing could be amazing. I’ll have to ask my friend if he ever thinks about breaking out POV-Ray on new equipment.

Sadly, yes. The problem seems to be that people read the title and the first sentence or two and almost never got through the whole post and went in perturbed.

BTW… I sent a text message to my friend Rob, and he was able to rattle off the specs on the Sequent S81:

“24×386, 24×387, 24xWeitek 1167 (two diff mathcos for optimizing code, selectable in the compiler). 104 meg of RAM, BTW…In 64k chips. BTW the procs & mathcos were all 20MHz…

In case anyone isn’t familiar with 30-year old midrange hardware, the S81 was a shared memory symmetric multiprocessor system (hence the name, go figure…) running a custom Unix called Dynix/PTX which was an AT&T SVR4 derivative. It had a native compiler but we invariably used GNU C/C++.”

He continued on about POV-Ray, “I did run POVray for a little bit, but fell back on my older standbys when I got a huge middle finger from their dev team after I offered to help with a multiprocessor port for speed…”

I should point out that we were students when the Sequent was first installed, but Rob went on to be the department sysadmin. He was there until after the decommission of the system and its replacement by a Sun server (which took up probably 1/6 to 1/8 of the floor space in the machine room).

What a great bit of history and experience there! I remember the Weitek math co-processors, very interesting that they were present here alongside the ‘387 and could be selected in compiling. Thanks for adding this.

POV-Ray still appears to be going; here’s a GitHub link:

https://github.com/POV-Ray/povray

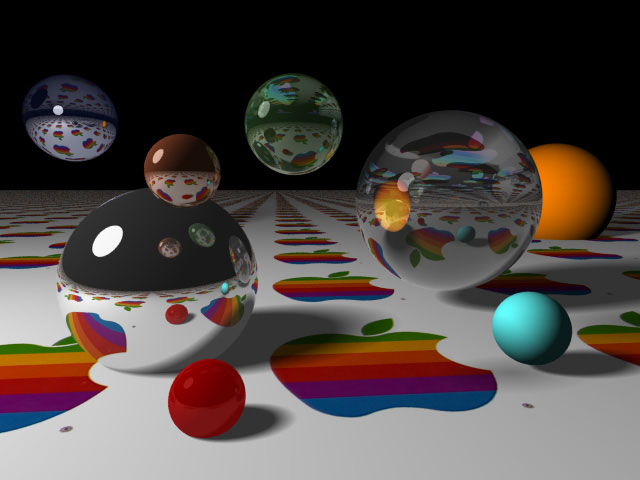

I had ported POV-Ray around ’90-92 on a 386 with SCO Unix. I would leave it running over the weekend to render a few frames in a sequence of clouds blowing by in a blue sky, reflected by a chrome ball on a checkered plane. This impressed my coworkers, but really those were all canned elements I put in a simple scene description file.

> the 7.14MHz, 16-bit Amiga

I believe you mean 32-bit.

Well, it’s a 16/32 processor. It’s 32-bit internally but is sitting on a 16-bit system bus. The Apple IIgs and SNES have a 65C816, which is similarly an 8/16 processor. I’ve always found it interesting that both the Sega MegaDrive/Genesis and the SNES are considered and marketed as “16-bit” systems, when the former sports a 16/32 MC68000 while the latter sports an 8/16 65C816. It’s one or the other, right? The nature of the bus, especially in those days, was such a factor in performance that I guess I’ve taken to thinking of the 68000 as a 16-bit processor. 68020 is where it’s 32-bit in and out, which made quite a difference. (Unless you’re a Mac LC or an Atari Falcon!)

The myth that just won’t die.

The 68000 isn’t 32bit anywhere.

“The myth that just won’t die. The 68000 isn’t 32bit anywhere.”

Jonathan,

Wikipedia accurately describes the matter,

“The design implements a 32-bit instruction set, with 32-bit registers and a 32-bit internal data bus. The address bus is 24-bits and does not use memory segmentation, which made it popular with programmers. Internally, it uses a 16-bit data ALU and two additional 16-bit ALUs used mostly for addresses, and has a 16-bit external data bus. For this reason, Motorola referred to it as a 16/32-bit processor.”

@Blake Patterson:

That Wikipedia entry doesn’t describe the matter accurately.

The 68000 doesn’t have a 32bit instruction set. It is a 16bit chip with 16bit instructions which implement a very basic degree of vector operability by decoding instructions which lead to it performing two 16bit operations in succession.

These are presented to the programmer as if they are 32bit operands, but they are not; they are 16bit operand pairs which are loaded on and off the CPU in amounts no larger than 16bits.

The trouble with this discussion is the same as it has been since Motorola came up with the idea of branding it 32bit for marketing reasons: It relies on the fact that people don’t really understand what makes a chip 16 or 32 bit. What Motorola did was change the definition to suit their own device for marketing reasons.

In fact you can trace the exact historical moment when they attempted to perform that specific piece of technical “hide the lady” to a paragraph in an article published in the April 1983 edition of Byte magazine on page 70:

“Many criteria can qualify a processor as an 8-, 16-, or 32-bit device. A manufacturer might base its label on the width of the data bus, address bus, data sizes, internal data paths, arithmetic and logic unit (ALU), and/or fundamental operation code (op code). Generally, the data-bus size has determined the processor size, though perhaps the best choice would be based on the size of the op code”.

The subterfuge can be roughly translated as: “Oh, how do you know how many bits there are on a CPU? It’s so hard, it can be done in so many different ways, here are a lot of confusing examples. Let me clarify by offering a way which cuts through the confusion and favours our own device”.

Its author, Tom Starnes, poses as an “Analyst” but is in fact a marketeer. The hidden lady is right there in the words: “Generally, the data-bus size has determined the processor size”.

He does nothing but try to switch user attention away from how the industry and CPU builders and systems manufacturers measure these things and instead by a trick of the eye asks the reader to look at a feature where the correct answer is not found, because it means they win.

The 68000 has a 16bit ALU and a 16bit data bus. It connects to 16bit RAM on 16bit motherboards. There is literally a single type of instruction which does on-chip register-to-register copies which take 4 cycles to move 32 bits. Those are the facts of the matter. You can’t get from that single category of instruction to “so therefore it’s 32bit”. It all dates back to marketing. Not the chip design.

Gorgeous post. Thanks.